Handling sudden traffic spikes is crucial to maintaining your website’s performance, reliability, and user experience. Here’s a comprehensive guide to strategies for managing traffic surges effectively, including the role of load balancers like NodeBalancer.

1. Implement Load Balancing

What is Load Balancing?

Load balancing distributes incoming network traffic across multiple servers to ensure no single server becomes a bottleneck. This enhances the availability and reliability of your website.

How Do Load Balancers Help?

- Scalability: Spread traffic across a pool of servers, so you can add capacity by adding servers behind the balancer as demand increases.

- Redundancy: If one server fails, traffic is rerouted to healthy servers, minimizing downtime.

- Optimal Resource Utilization: Ensures all servers are used efficiently, preventing overloading.
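To make the idea concrete, here is a minimal sketch of round-robin distribution, one of the simplest balancing strategies. It assumes a fixed list of backends (the `app1:8080`-style addresses are hypothetical). Requests go to each backend in turn, and any backend marked down is skipped, which is the same redundancy behavior described above. Production balancers add connection tracking, weighting, and real health probes on top of this.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in turn, skipping any that are marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        # A failed health check takes the backend out of rotation.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        # A recovered backend rejoins the rotation.
        self.healthy.add(backend)

    def next_backend(self):
        # Look at each backend at most once per call so we never loop forever.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


# Hypothetical backend addresses.
lb = RoundRobinBalancer(["app1:8080", "app2:8080", "app3:8080"])
lb.mark_down("app2:8080")
print([lb.next_backend() for _ in range(4)])
# ['app1:8080', 'app3:8080', 'app1:8080', 'app3:8080']
```

While `app2` is down, traffic alternates between the two remaining servers; calling `mark_up("app2:8080")` puts it back into the cycle.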

NodeBalancer and Its Benefits

NodeBalancer is the managed load balancing service offered by Linode. It distributes traffic among your nodes (servers) and offers features such as:

- Health Checks: Regularly monitors server health and removes unhealthy nodes from the pool.

- SSL Termination: Offloads SSL/TLS decryption from your servers, freeing their CPU for application work.

- Session Persistence: Routes a given user’s requests to the same backend server, which matters for applications that keep session state in a server’s local memory.
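NodeBalancer’s persistence is configured in Linode’s own settings, so the following is only a generic illustration of the idea behind source-IP persistence, not how NodeBalancer implements it. The client IP is hashed with a stable hash so that the same IP always maps to the same backend. The function name `pick_backend` and the backend names are hypothetical.

```python
import hashlib


def pick_backend(client_ip, backends):
    """Map a client IP to the same backend on every request (source-IP persistence)."""
    if not backends:
        raise ValueError("no backends available")
    # Use a stable hash: Python's built-in hash() for str is randomized per process,
    # so it would send the same client to different servers after a restart.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


pool = ["app1", "app2", "app3"]  # hypothetical backend names
print(pick_backend("198.51.100.4", pool))
```

One weakness of plain modulo hashing is that adding or removing a backend remaps most clients to new servers. Consistent hashing or a cookie-based approach reduces that churn.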

Do NodeBalancers Really Help?

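Yes, NodeBalancers (and load balancers in general) are effective at managing traffic spikes, with one caveat: they can only spread load across the capacity you already have. By distributing requests across healthy backends, they reduce the risk of any single server being overloaded and keep the site available when a node fails. To absorb a very large surge, pair them with enough backend nodes or with auto-scaling.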

2. Auto-Scaling

What is Auto-Scaling?

Auto-scaling automatically adjusts the number of active servers based on current traffic demands. It can scale out (add more servers) during high traffic and scale in (remove servers) when traffic decreases.

Benefits:

- Cost-Efficiency: Pay only for the resources you need.

- Flexibility: Quickly respond to traffic changes without manual intervention.

- Improved Performance: Maintain optimal performance levels during traffic surges.

Implementation Tips:

- Set Thresholds: Define the CPU, memory, or request-rate levels that trigger scaling actions.

- Use Cloud Services: Platforms like AWS, Google Cloud, and Azure offer robust auto-scaling solutions.

- Monitor and Optimize: Continuously monitor performance and adjust scaling policies as needed.
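A threshold policy can be sketched as a small function. This one uses a target-tracking rule, similar in spirit to the proportional formula Kubernetes documents for its Horizontal Pod Autoscaler: if average CPU is above the target, the pool grows in proportion to the overload, and it shrinks when CPU is low. The 60% target and the 2 to 20 server bounds are illustrative assumptions, not recommendations.

```python
import math


def desired_servers(current_servers, avg_cpu_percent, target_cpu_percent=60.0,
                    min_servers=2, max_servers=20):
    """Target-tracking rule: size the pool so average CPU lands near the target."""
    if current_servers < 1:
        raise ValueError("need at least one running server to measure load")
    # Total load stays roughly constant, so the servers needed scale with load / target.
    raw = math.ceil(current_servers * avg_cpu_percent / target_cpu_percent)
    # Clamp so a bad metric can't scale to zero or run up an unbounded bill.
    return max(min_servers, min(max_servers, raw))


print(desired_servers(4, 90))  # spike: 4 * 90 / 60 = 6 servers
print(desired_servers(4, 15))  # quiet: 1 server needed, clamped up to the minimum of 2
```

Unlike a "+1 server per check" rule, the proportional rule can add several servers in one step during a sudden spike. Real autoscalers also apply cooldown or stabilization windows so the pool doesn’t flap up and down on noisy metrics.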

3. Content Delivery Network (CDN)

What is a CDN?

A CDN is a network of geographically distributed servers that cache and deliver web content to users from the nearest location.

Benefits:

- Reduced Latency: Faster content delivery by serving data from locations closer to users.

- Offloaded Traffic: Decreases the load on your origin server by handling static content delivery.

- Improved Reliability: Distributes traffic across multiple servers, enhancing availability.
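A CDN can only offload traffic it is allowed to cache, and the origin controls that mostly through the `Cache-Control` response header. The sketch below picks a header value per request path. The policy itself (long caching for static assets, five minutes of edge caching for HTML, no caching under a hypothetical `/api/` prefix) is an assumption for illustration. `s-maxage` applies only to shared caches such as CDNs, while `max-age` also applies to browsers.

```python
import posixpath

# Hypothetical policy: which file extensions count as long-lived static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2"}


def cache_control_for(path):
    """Pick a Cache-Control value that lets a CDN serve the response from its edge."""
    if path.startswith("/api/"):
        # Per-user or fast-changing data: never store it in any cache.
        return "no-store"
    ext = posixpath.splitext(path)[1].lower()
    if ext in STATIC_EXTENSIONS:
        # Safe only if filenames change when content changes (e.g. app.3f9a1c.js).
        return "public, max-age=31536000, immutable"
    # HTML: browsers revalidate every time, the CDN may keep a copy for 5 minutes.
    return "public, max-age=0, s-maxage=300"


for p in ["/static/app.3f9a1c.js", "/index.html", "/api/cart"]:
    print(p, "->", cache_control_for(p))
```

With a policy like this, a spike of visitors hitting the home page mostly reaches the CDN’s copy, and the origin sees roughly one request per page every five minutes per edge location.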

Popular CDNs:

- Cloudflare

- Akamai

- Amazon CloudFront

- Fastly

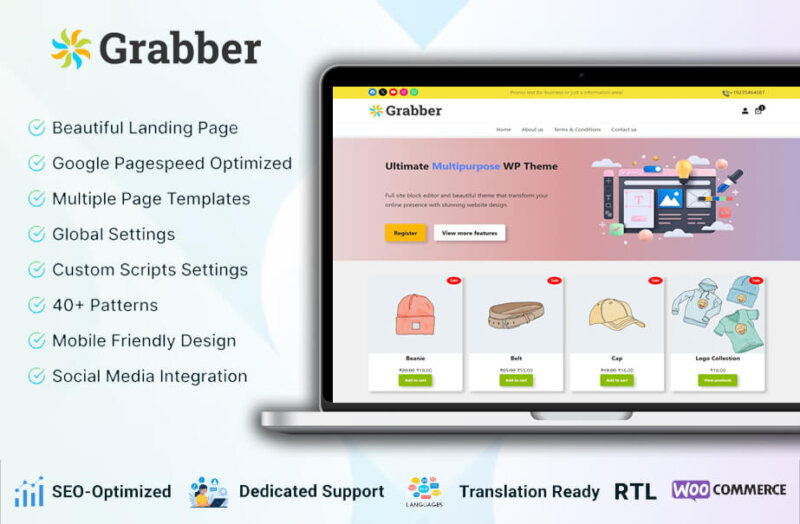

4. Caching Strategies

Why Caching Matters:

Caching stores frequently accessed data temporarily to reduce the need for repeated processing or database queries, significantly speeding up response times.

Types of Caching:

- Browser Caching: Stores static assets (images, CSS, JavaScript) in the user’s browser.

- Server-Side Caching: Utilizes in-memory stores like Redis or Memcached to cache dynamic content.

- Edge Caching: Caches content at the CDN edge servers for faster access.
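Server-side caching is commonly done with the cache-aside pattern: look in the cache first, and only on a miss query the database and store the result with an expiry time. The sketch below uses a small in-process `TTLCache` class as a stand-in for Redis or Memcached so that it runs on its own. `get_product` and the `load_from_db` callback are hypothetical names for your own data access code.

```python
import time


class TTLCache:
    """In-process stand-in for Redis/Memcached: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: treat it as a miss
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def get_product(product_id, cache, load_from_db):
    """Cache-aside: serve from cache if possible, otherwise load from the DB and cache it."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:  # None doubles as the "miss" marker, so None values aren't cached
        return cached
    value = load_from_db(product_id)
    cache.set(key, value)
    return value


db_calls = []


def fake_db(product_id):
    db_calls.append(product_id)  # count how often the "database" is actually hit
    return {"id": product_id, "name": "example"}


cache = TTLCache(ttl_seconds=60)
for _ in range(1000):
    get_product(7, cache, fake_db)
print(len(db_calls))  # 1: the other 999 reads were served from the cache
```

During a spike this is exactly where the savings come from: a thousand reads of a popular item cost one database query per TTL window instead of a thousand.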

Benefits:

- Reduced Server Load: Fewer requests reach your origin servers.

- Faster Load Times: Users experience quicker page loads.

- Cost Savings: Lower bandwidth and server resource usage.

5. Optimize Application Performance

Key Areas to Optimize:

- Database Queries: Index frequently filtered or joined columns and rewrite slow queries to reduce latency.

- Code Efficiency: Refactor code to improve execution speed and resource usage.

- Asynchronous Processing: Offload long-running tasks to background processes.

- Minimize HTTP Requests: Combine files and use techniques like lazy loading to reduce the number of requests.
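Asynchronous processing means the request handler only records that work needs doing and returns immediately, while a separate worker does the slow part. The sketch below shows the shape of this with Python’s standard `queue` and `threading` modules. `send_welcome_email` and `handle_signup` are hypothetical; in production you would more likely use a durable job queue so that queued work survives a restart.

```python
import queue
import threading

jobs = queue.Queue()
results = []


def send_welcome_email(user_id):
    # Hypothetical slow task (e.g. calling an email API); here it just records the work.
    results.append(f"emailed user {user_id}")


def worker():
    """Background worker: pull jobs off the queue until it receives the None sentinel."""
    while True:
        user_id = jobs.get()
        if user_id is None:  # sentinel: shut the worker down
            jobs.task_done()
            break
        try:
            send_welcome_email(user_id)
        finally:
            jobs.task_done()


def handle_signup(user_id):
    """Request handler: enqueue the slow work and respond right away."""
    jobs.put(user_id)
    return {"status": "accepted", "user_id": user_id}


t = threading.Thread(target=worker, daemon=True)
t.start()
print(handle_signup(42))
jobs.join()     # wait for queued work (only needed in this demo)
jobs.put(None)  # stop the worker
t.join()
print(results)
```

The handler’s response time no longer depends on the email provider, and under a spike the queue absorbs the backlog instead of tying up request threads.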

Tools for Optimization:

- Profilers: Identify performance bottlenecks in your application code.

- Monitoring Tools: Use tools like New Relic, Datadog, or Grafana to monitor performance metrics.

- Automated Testing: Implement performance testing to ensure your application can handle expected traffic loads.

6. Implement Rate Limiting and Throttling

What is Rate Limiting?

Rate limiting restricts the number of requests a user or IP address can make within a specified time frame.

Benefits:

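- Prevents Abuse: Blunts brute-force attempts, scraping, and some application-layer DDoS traffic. Large volumetric attacks need upstream protection, such as a CDN or a dedicated DDoS mitigation service.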

- Ensures Fair Resource Usage: Prevents a single user from monopolizing server resources.

- Maintains Performance: Keeps the website responsive for all users during traffic spikes.

Implementation Tips:

- Set Appropriate Limits: Balance between preventing abuse and not hindering legitimate users.

- Use Middleware or API Gateways: Implement rate limiting at the application or network layer.

- Monitor and Adjust: Regularly review and adjust limits based on traffic patterns.
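One common rate-limiting algorithm is the token bucket. Each client gets a bucket holding up to `capacity` tokens that refills at `rate` tokens per second, and every request spends one token. Short bursts are allowed, while sustained floods are cut off. The sketch below keeps buckets in a per-process dictionary keyed by client IP and is not thread-safe. Across several servers the state would need to live somewhere shared, or the limit would be enforced at a gateway. The limits shown are illustrative.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill for the time elapsed since the last check, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets = {}


def is_allowed(client_ip, capacity=10, rate=5.0):
    """One bucket per client IP; callers would answer HTTP 429 when this returns False."""
    bucket = buckets.get(client_ip)
    if bucket is None:
        bucket = buckets[client_ip] = TokenBucket(capacity, rate)
    return bucket.allow()


# A burst of 12 instant requests from one IP: the first 10 pass, the rest are limited.
print([is_allowed("203.0.113.7") for _ in range(12)].count(True))
```

The `clock` parameter is there so the refill behavior can be tested without waiting on real time.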

7. Prepare for Failover and Redundancy

What is Failover?

Failover is the ability to switch to a standby system or server in case of a failure.

Benefits:

- Increased Reliability: Minimizes downtime by quickly switching to backup systems.

- Data Redundancy: Ensures data is replicated across multiple servers or locations.

- Business Continuity: Maintains operations during unexpected outages.

Implementation Tips:

- Geographical Redundancy: Distribute servers across different data centers or regions.

- Regular Testing: Periodically test failover mechanisms to ensure they work as expected.

- Automated Monitoring: Use monitoring tools to detect failures and trigger failover automatically.
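Automated failover usually waits for several consecutive failed health checks before switching, so that a single dropped probe does not trigger a switch. The sketch below models that decision for one primary and one standby. The names `FailoverMonitor`, `db-primary`, and `db-standby` and the threshold of 3 are hypothetical. It deliberately does not fail back on its own, because many teams prefer to return to the primary manually after checking that it is healthy.

```python
class FailoverMonitor:
    """Route to the primary until it fails `threshold` health checks in a row, then the standby."""

    def __init__(self, primary, standby, threshold=3):
        self.primary = primary
        self.standby = standby
        self.threshold = threshold
        self.consecutive_failures = 0
        self.active = primary

    def record_check(self, primary_healthy):
        """Feed in one health-check result; returns the endpoint traffic should use now."""
        if primary_healthy:
            self.consecutive_failures = 0  # any success resets the streak
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.threshold:
                self.active = self.standby  # promote the standby (no automatic failback)
        return self.active


monitor = FailoverMonitor("db-primary", "db-standby", threshold=3)
for healthy in [True, False, False, True, False, False, False]:
    print(healthy, "->", monitor.record_check(healthy))
```

In this run, the isolated failures are ignored, and only the final run of three consecutive failures moves traffic to `db-standby`.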

8. Use Efficient Web Technologies

Choose Scalable Frameworks:

Select languages, runtimes, and frameworks known for scalability and performance, such as Node.js, Go, or a well-optimized PHP framework.

Implement Microservices Architecture:

Break down your application into smaller, independent services that can scale individually based on demand.

Leverage Serverless Computing:

Use serverless platforms like AWS Lambda or Google Cloud Functions to handle specific tasks without managing servers, allowing automatic scaling based on usage.
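With serverless functions, you write only the handler. The platform runs as many concurrent copies as incoming requests require, up to your account’s concurrency limits. Below is a minimal sketch of a Python AWS Lambda handler that responds to an API Gateway proxy request. The `name` query parameter and the greeting are illustrative assumptions. API Gateway sends `queryStringParameters` as null when the URL has no query string, which is why the code falls back to an empty dict.

```python
import json


def lambda_handler(event, context):
    """Minimal AWS Lambda handler returning an API Gateway proxy-style response."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical parameter for illustration
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }


# Local call with a hand-built event; on AWS, the platform supplies event and context.
print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```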

Conclusion

Handling sudden traffic spikes requires a multi-faceted approach that includes load balancing, auto-scaling, caching, optimizing application performance, and ensuring redundancy. Load balancers like NodeBalancer are integral to this strategy, as they distribute traffic efficiently across multiple servers, enhance reliability, and support scalability. By implementing these strategies, you can ensure your website remains robust and responsive, even during unexpected surges in traffic.

If you’re considering using NodeBalancer or any load balancing solution, evaluate your specific needs, infrastructure, and budget to choose the best option for your website. Combining load balancing with other optimization techniques will provide the most effective defense against traffic spikes.
